Rethinking Memory in the Age of ChatGPT

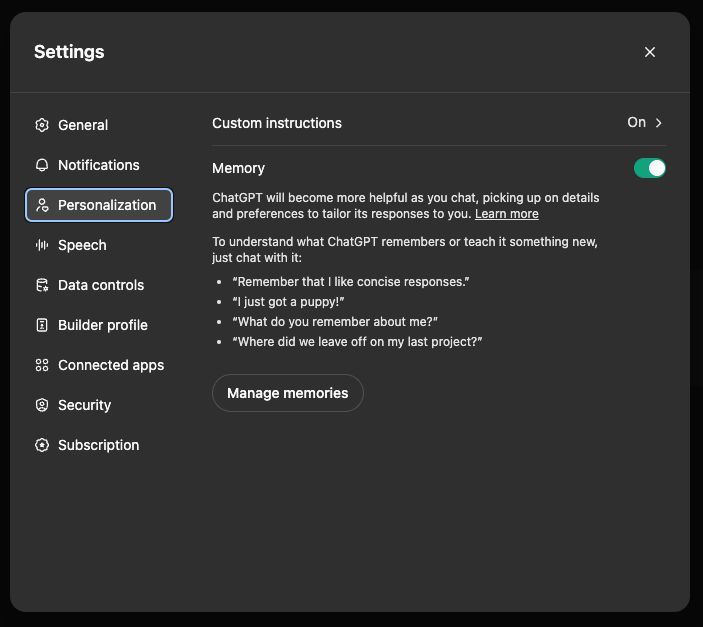

I've been using ChatGPT for a while now, and I have to say, it's been a game-changer for my work (until Olakai, but more on that later). The ability to quickly generate responses to common questions and tasks has saved me so much time and effort. But the more I use it, the more I notice something that's both fascinating and frustrating: its "memory".

ChatGPT is amazing at remembering specific conversations and interactions, but it's also incredibly... personal. It seems to remember what I don't want it to remember: my obsession with tiramisu, my love of 80s music, and even my favorite childhood memories. It's like having a digital therapist that knows all my deepest secrets.

But what's even more surprising is that it remembers the emotional nuances of our conversations. It can pick up on my tone, my sarcasm, and even my frustration. It's like it has a sixth sense for knowing exactly what to say to get under my skin. This is both impressive and unsettling. I'm not sure I want my AI assistant to know so much about me. It's like having a digital shadow that follows me everywhere.

As we increasingly rely on AI to drive business decisions, this raises profound questions about memory at work. Who truly owns it: the employees who input the data, the AI systems that process it, or the company? How do we ensure that the knowledge transferred between humans and machines is meaningful and contextually rich? What happens to an employee’s GenAI memory when they leave the company? How do we manage the emotional and personal aspects of memory in a way that respects individual privacy while enhancing collaboration?

This is a challenging problem to solve. It requires careful consideration of data governance, knowledge management, scalability, and human-AI collaboration, but also a clear definition of, and perhaps a vision for, what memory "is".